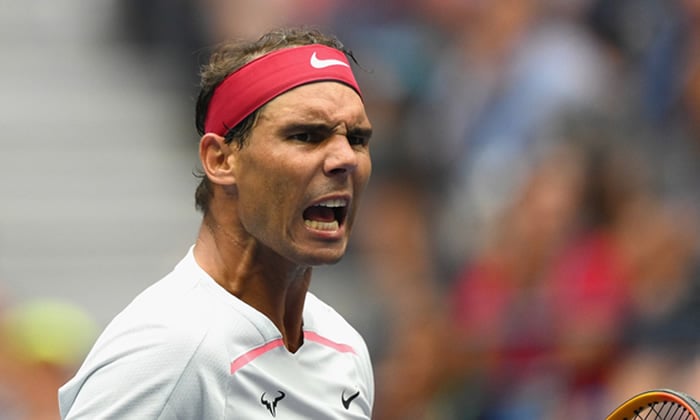

The Rise of AI-Generated Misinformation and Its Impact on Public Figures: The Case of Rafael Nadal

The rapid advancement of artificial intelligence (AI) has brought both exciting possibilities and serious challenges. One such challenge is the misuse of AI to create deepfakes: manipulated or wholly synthetic videos and audio recordings that convincingly portray individuals saying or doing things they never did. Deepfakes pose a significant threat to individuals and society, as they can be used to spread misinformation, damage reputations, and even incite violence. A recent incident involving tennis legend Rafael Nadal highlights the growing concern over the unauthorized use of AI-generated likenesses for deceptive advertising and other malicious purposes.

Following his retirement from professional tennis in November 2024, after representing Spain in the Davis Cup, Nadal discovered that AI-generated videos featuring a synthetic version of himself were circulating on various online platforms. These videos falsely attributed investment advice and promotional endorsements to Nadal, using a fabricated likeness that mimicked his image and voice. This unauthorized use of his persona constituted deceptive advertising, misleading viewers into believing that Nadal endorsed products or services with which he had no affiliation. The incident underscores how vulnerable public figures are to AI-powered impersonation and how readily the technology can be exploited for financial gain or other malicious ends.

The unauthorized use of Nadal’s AI-generated likeness raises several critical legal and ethical questions. Legally, creating and disseminating deepfakes for commercial purposes without consent can violate intellectual property rights, publicity rights, and consumer protection laws. Ethically, using deepfakes to deceive the public undermines trust in information sources and can have serious consequences for individuals and society. The lack of clear regulations and legal frameworks governing the creation and use of deepfakes makes this emerging threat difficult to address.

The increasing accessibility of AI tools that generate realistic deepfakes has made it easier for malicious actors to create convincing impersonations of public figures. This poses a substantial risk to individuals like Nadal, whose image and reputation can be tarnished by association with fabricated content. Furthermore, the widespread dissemination of deepfakes can erode public trust in online information, making it increasingly difficult to distinguish authentic content from manipulated content. That erosion of trust can have far-reaching consequences for democratic processes, public discourse, and the overall integrity of information ecosystems.

The Nadal incident highlights the urgent need for comprehensive legal frameworks and regulatory measures to address the challenges posed by AI-generated misinformation. These frameworks should include provisions for holding individuals and organizations accountable for the creation and dissemination of deepfakes for malicious purposes. Additionally, efforts should be made to develop robust technological solutions for detecting and mitigating the spread of deepfakes. Public awareness campaigns are also crucial to educate individuals about the existence and potential impact of deepfakes, empowering them to critically evaluate online information and identify potential manipulation.

The future of AI holds immense promise, but it also presents potential perils. The unauthorized use of AI-generated likenesses, as exemplified by the Nadal case, underscores the importance of proactively addressing the ethical and legal implications of this rapidly evolving technology. By developing robust legal frameworks, fostering technological innovation in deepfake detection, and promoting media literacy, we can mitigate the risks posed by AI-generated misinformation and ensure that this powerful technology is used responsibly and ethically. The protection of individual rights and the preservation of public trust in information are paramount in navigating the evolving landscape of AI and its societal impact.